A Fairer Future for Dating App Users

For millions of users worldwide, dating apps are more than just tools — they are social lifelines, gateways to meaningful connections, and spaces where personal identity and trust intersect. Yet, for some, the experience can abruptly turn frustrating when an account is suspended or banned. These actions, often opaque and automated, can leave users feeling alienated and unheard.

Recognizing these challenges, Hinge has taken a decisive step to redefine its account ban policies, placing fairness, transparency, and user experience at the core. This is not just a technical adjustment but a philosophical shift in how a digital platform approaches moderation. This article explores Hinge’s new policies, the reasoning behind them, and what they mean for users and the broader dating app industry.

Why Hinge’s Shift Matters

Account bans are more than administrative actions; they have real-world consequences for users’ trust and platform engagement. In previous moderation models, users often faced sudden, unexplained suspensions, leaving them frustrated and unsure how to rectify the situation. By emphasizing fairness, Hinge seeks to:

- Enhance transparency: Users now receive clear communication about the reasons behind a ban.
- Reduce wrongful bans: Advanced algorithms combined with human oversight aim to prevent mistakes.
- Rebuild trust: When users feel their concerns are acknowledged, engagement improves, and the platform’s reputation is strengthened.

Ultimately, this shift reflects a broader understanding that moderation should not just be about policing behavior; it should also support the community it serves.

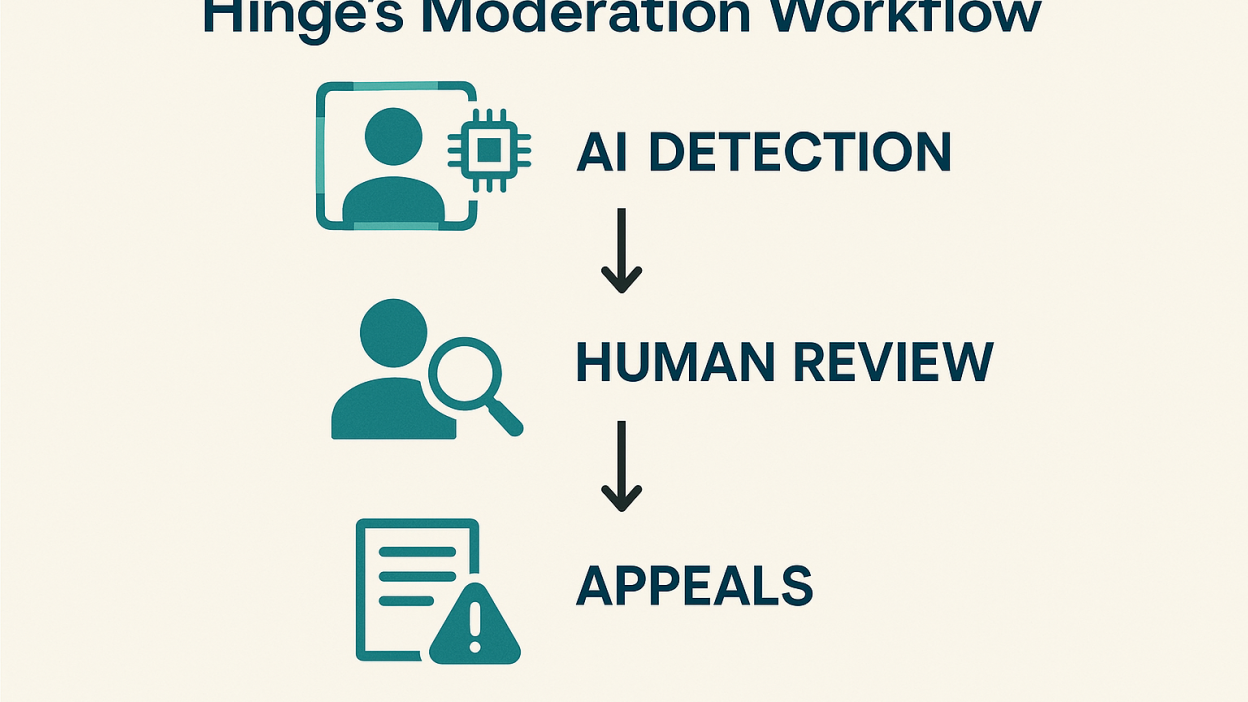

How Hinge Implements Fairness in Moderation

Hinge’s approach is multi-layered, combining AI efficiency with human judgment and user-centered processes.

Clear and Detailed Ban Notifications

Previously, bans were often delivered with minimal explanation, leaving users in the dark. Hinge now provides comprehensive notifications, outlining the specific behaviors that triggered the action and referencing the app’s community guidelines.

Providing context empowers users to understand their mistakes and learn from them, reducing frustration and negative sentiment.

Structured Appeals Process

Recognizing that moderation is not infallible, Hinge has implemented a formal appeals system. Users can contest bans, offer additional context, and request a review from human moderators.

Fairness is incomplete without recourse. The appeals process ensures that moderation is correctable, balancing automated efficiency with human empathy.

Algorithmic Oversight with Human Review

While AI scans millions of interactions for potential violations, critical cases are reviewed by trained moderators to prevent false positives.

This hybrid system ensures that automation does not override nuance, preserving fairness while maintaining scale and speed in moderation.
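To make the hybrid model more concrete, here is a minimal Python sketch of how flagged content might be routed between automated screening and human review. Hinge has not published its internal logic, so the `Flag` fields, the `"critical"` severity label, and the 0.5 review threshold are hypothetical assumptions chosen for illustration. The property it demonstrates is the one described above: the automated layer never issues a ban on its own. It either dismisses a flag or escalates it to a moderator.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    NO_ACTION = "no_action"
    HUMAN_REVIEW = "human_review"


@dataclass
class Flag:
    content_id: str
    violation_score: float  # hypothetical model score in [0, 1]
    severity: str           # hypothetical label, e.g. "low" or "critical"


def route_flag(flag: Flag, review_threshold: float = 0.5) -> Route:
    """Decide whether an automated flag is dismissed or sent to a human.

    The threshold and severity labels are illustrative assumptions,
    not Hinge's actual parameters. Note there is no automatic "ban" route.
    """
    if flag.severity == "critical":
        # Critical cases always get a human look, regardless of score.
        return Route.HUMAN_REVIEW
    if flag.violation_score >= review_threshold:
        # Likely violations are escalated rather than actioned automatically.
        return Route.HUMAN_REVIEW
    return Route.NO_ACTION


def triage(flags: list[Flag], review_threshold: float = 0.5) -> list[Flag]:
    """Build a moderator queue from flags that need human review."""
    queue = [f for f in flags if route_flag(f, review_threshold) is Route.HUMAN_REVIEW]
    # Highest-scoring flags first, so moderators see the likeliest violations sooner.
    queue.sort(key=lambda f: f.violation_score, reverse=True)
    return queue
```

For example, three flags scored 0.92 (low severity), 0.10 (low severity), and 0.30 (critical) would produce a review queue containing the first and third, with the 0.92 flag first. Putting every potential ban in front of a person trades some speed for fewer false positives, which is the same trade-off discussed in the pros and cons below.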

User Education and Preventive Measures

Hinge is also investing in educating users about acceptable behavior and providing tools to self-moderate, such as clear guidelines, tips for respectful interactions, and proactive alerts for borderline actions.

Prevention is better than punishment. By helping users navigate the rules, Hinge reduces the risk of unintentional violations, fostering a safer and more positive community.

Pros and Cons of a Fairer Moderation System

No system is perfect. Hinge’s approach has clear advantages and some trade-offs:

Pros:

- Improves user trust and engagement
- Minimizes wrongful bans and the frustration they cause
- Enhances Hinge’s reputation as an ethical, user-centric platform

Cons:

- Human review can be resource-intensive
- Appeals may slow down moderation for high-volume cases
- Users could attempt to exploit the appeals system

By acknowledging these trade-offs, Hinge demonstrates responsibility and transparency, reinforcing the ethical dimensions of digital moderation.

Moderation Across Regions

Moderation policies are shaped by cultural norms, regulations, and user expectations, meaning fairness has a global dimension.

- Europe: The GDPR emphasizes transparency, data protection, and the right to contest decisions made solely by automated means. Hinge’s new approach aligns closely with these legal standards.
- North America: Users increasingly expect ethical moderation that balances safety with fairness, reflecting societal debates about digital accountability.
- Asia: As dating apps are adopted rapidly, moderation policies are scrutinized for both safety and inclusivity, particularly regarding harassment and misinformation.

Taking this global perspective is meant to keep the policy changes relevant, culturally sensitive, and legally compliant, allowing the platform to scale responsibly.

Real-World Examples

Consider a user banned over messages that were misinterpreted. Under the previous system, their account could remain blocked indefinitely with no explanation. Now, through Hinge’s appeals process, the user can provide context and be reinstated if the ban proves unjustified.

Another example involves AI detection misflagging content as inappropriate. Human review allows moderators to correct errors, preventing unnecessary disruptions to the user experience.

These cases illustrate how fairness in moderation is practical, actionable, and deeply human, not just theoretical.

FAQs

Q1: How does Hinge define a fair account ban?

A: Fairness involves transparent communication, human oversight, and a structured appeals process to ensure decisions are accurate and just.

Q2: Can users appeal immediately?

A: Yes, Hinge provides a direct appeals channel for users to contest suspensions in a timely manner.

Q3: Will AI handle all moderation in the future?

A: AI will continue to scale detection, but human review remains critical for nuanced cases to preserve fairness and context.

Balancing Safety and Fairness

Hinge’s new approach to account bans represents a broader shift in the tech industry. Moderation isn’t just about removing bad actors — it’s about building trust, promoting fairness, and creating healthy digital communities.

By combining AI efficiency with human empathy, Hinge is positioning itself as a model for responsible moderation in dating apps, showing the industry that fairness and safety can coexist.


Note: All logos, trademarks, and brand names referenced herein remain the property of their respective owners. The content is provided for editorial and informational purposes only. Any AI-generated images are illustrative and do not represent official brand assets.