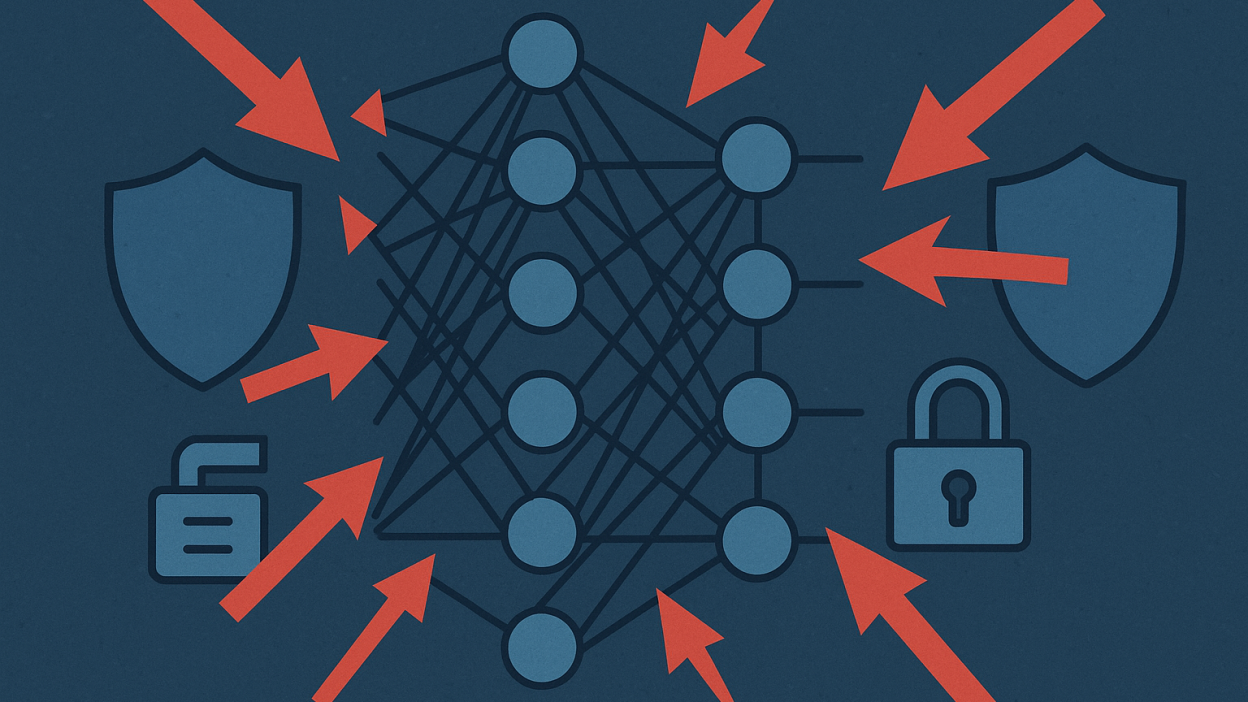

Security in AI: Adversarial-Proofing Machine Learning Systems for a Safer Future

Artificial intelligence (AI) systems have become integral to modern life. From powering recommendation engines and autonomous vehicles to assisting in healthcare and financial decision-making, machine learning models are increasingly trusted to interpret data, guide actions, and even influence outcomes. However, this growing dependence comes with profound vulnerabilities. AI systems, though sophisticated, are not immune to malicious manipulation—particularly adversarial attacks that exploit model weaknesses to produce incorrect or harmful outputs.

Adversarial attacks are more than theoretical risks. Researchers have demonstrated how subtle perturbations to images, audio signals, or data inputs can trick AI into misclassifying objects, recommending unsafe actions, or leaking sensitive information. The implications span from security breaches and privacy violations to life-threatening failures in critical systems like healthcare or autonomous driving.

This makes adversarial-proofing AI systems not just a technical necessity but a societal imperative. As AI integrates deeper into everyday processes, ensuring its resilience against manipulation becomes essential to maintaining trust, fairness, and safety. Adversarial-proofing is about more than algorithms—it’s about safeguarding human well-being.

Governments, enterprises, and research institutions are investing heavily in securing AI frameworks, and developers increasingly recognize that adversarial robustness must be built in from the ground up. In this article, we’ll explore how adversarial attacks work, real-world examples, current defenses, and actionable strategies for creating more secure and trustworthy AI systems that empower users and protect society at large.

Understanding Adversarial Attacks: How AI Can Be Manipulated

Adversarial attacks are deliberate attempts to fool AI systems by making subtle changes to input data. Unlike random noise, adversarial perturbations are carefully crafted to exploit vulnerabilities in machine learning models, often bypassing human detection while causing systems to produce erroneous results.

Types of Adversarial Attacks

- Evasion Attacks: Attackers manipulate input data to cause incorrect predictions or classifications at inference time. For instance, an attacker might slightly alter an image so that a self-driving car’s vision system misidentifies a stop sign as a speed limit sign.
- Poisoning Attacks: Malicious actors insert manipulated data into training datasets, degrading model performance or introducing biases that can be exploited later.
- Model Inversion Attacks: Attackers attempt to reconstruct sensitive data from model outputs, compromising privacy and exposing confidential information.
- Membership Inference Attacks: By probing models, attackers can determine if specific data points were used during training, raising privacy concerns.
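To make membership inference concrete, here is a minimal sketch of a confidence-threshold attack. Everything in it is an assumption for illustration: `MemorizingModel` is a hypothetical, deliberately overfit stand-in for a real model, and the threshold of 0.95 is arbitrary. The idea is only that a model which is unusually confident on a point may have seen that point during training.

```python
import math

class MemorizingModel:
    """Hypothetical overfit model: confidence decays with distance to the
    nearest training point, so memorized points get confidence 1.0."""

    def __init__(self, training_points):
        self.training_points = list(training_points)

    def confidence(self, x):
        nearest = min(math.dist(x, t) for t in self.training_points)
        return math.exp(-nearest)

def infer_membership(model, x, threshold=0.95):
    """Guess that x was a training point if the model is very confident on it."""
    return model.confidence(x) >= threshold

# Illustrative training set (made-up values).
train = [(0.0, 0.0), (1.0, 2.0), (3.0, 1.0)]
model = MemorizingModel(train)

print(infer_membership(model, (1.0, 2.0)))  # a point from the training set
print(infer_membership(model, (2.0, 2.0)))  # a point the model never saw
```

Real attacks only see the outputs of a deployed model and usually calibrate the threshold on data the attacker controls, but the signal they exploit is the same gap in confidence between seen and unseen points.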

Why Are AI Models Vulnerable?

- High Dimensionality: Neural networks operate in vast, complex data spaces where small perturbations can drastically alter outcomes.
- Overfitting: Models that overfit on training data are less generalizable and more susceptible to adversarial manipulation.
- Gradient Sensitivity: Attackers often exploit gradients in loss functions to craft adversarial examples that push models toward incorrect decisions.
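The gradient-based attack described above can be sketched with the Fast Gradient Sign Method (FGSM), one well-known way of exploiting loss gradients. The model here is a tiny logistic regression with made-up weights, and the input, label, and `epsilon` are illustrative assumptions, not values from any real system.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def fgsm(weights, bias, x, y, epsilon):
    """Fast Gradient Sign Method for logistic regression.

    For cross-entropy loss, the gradient with respect to the input is
    (p - y) * w, so each feature is moved by epsilon along that sign.
    """
    p = predict_proba(weights, bias, x)
    sign = lambda g: (g > 0) - (g < 0)
    return [xi + epsilon * sign((p - y) * w) for xi, w in zip(x, weights)]

# Hypothetical toy model and input (illustrative values only).
weights = [2.0, -3.0, 1.0]
bias = 0.0
x = [0.5, 0.1, 0.2]  # correctly classified as class 1
y = 1

x_adv = fgsm(weights, bias, x, y, epsilon=0.2)
clean_p = predict_proba(weights, bias, x)
adv_p = predict_proba(weights, bias, x_adv)
print(f"clean P(class 1) = {clean_p:.3f}, adversarial P(class 1) = {adv_p:.3f}")
```

No feature moves by more than `epsilon`, yet the predicted class flips. In high-dimensional inputs such as images, the same budget is spread across thousands of features, which is why the change can be invisible to a person.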

Human Perspective

For everyday users, adversarial attacks might seem distant, but their consequences are tangible. Misdiagnosed medical images, manipulated financial systems, and compromised personal data are real threats. Developers must recognize that adversarial vulnerabilities are not incidental bugs to patch once; they are design challenges requiring proactive solutions. The human cost of ignoring adversarial threats could be catastrophic.

Real-World Impacts: When AI Systems Fail Under Attack

Adversarial attacks are no longer confined to academic research—they have been demonstrated across industries, sometimes with alarming implications.

Healthcare Systems

AI models used for disease diagnosis and imaging analysis are vulnerable to adversarial perturbations. In one study, researchers introduced imperceptible noise to skin lesion images, causing AI systems to misclassify malignant tumors as benign. The human cost of such errors could be life-threatening.

Autonomous Vehicles

Self-driving cars rely on computer vision to interpret road signs and detect pedestrians. Even slight alterations to stop signs—like stickers or paint—can lead to disastrous misinterpretations, putting lives at risk.

Financial Services

Fraud detection algorithms that flag suspicious transactions can be manipulated with carefully crafted transaction patterns. Attackers can bypass security measures, leading to financial loss and identity theft.

Voice Assistants

Voice-controlled devices like smart speakers have been tricked using adversarial audio signals that are inaudible to humans but mislead AI-driven voice recognition systems.

Content Moderation

Platforms relying on AI to filter harmful content can be circumvented by adversarially modified images or text, allowing harmful or extremist content to evade automated detection.

Human Reflection

These examples reveal how AI’s weaknesses are exploited not only by sophisticated hackers but also by opportunists seeking to bypass safeguards. As AI continues to handle critical tasks, ensuring its resilience against adversarial interference is not optional—it’s an ethical obligation. Users expect technology to assist them, not endanger them, and adversarial-proofing is central to earning and maintaining that trust.

Techniques for Adversarial-Proofing AI Systems

Defending against adversarial attacks requires a multi-layered approach that combines model robustness, secure training practices, and ongoing evaluation.

Adversarial Training

Adversarial training involves augmenting training datasets with adversarial examples. By exposing the model to manipulated inputs during training, it becomes better at recognizing and resisting adversarial perturbations during deployment.

Example:

Image classifiers are trained on both clean and adversarial images, so the model learns to assign the correct label even when an input carries an adversarial perturbation.
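As a rough sketch of that training loop, the example below trains a bias-free logistic regression on each clean example and on an FGSM-perturbed copy generated against the current weights. The dataset, `epsilon`, learning rate, and epoch count are all hypothetical choices for illustration; a real pipeline would use a deep-learning framework and a stronger attack.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def score(weights, x):
    return sum(w * xi for w, xi in zip(weights, x))

def fgsm(weights, x, y, epsilon):
    """Perturb x by epsilon along the sign of the loss gradient w.r.t. x."""
    p = sigmoid(score(weights, x))
    sign = lambda g: (g > 0) - (g < 0)
    return [xi + epsilon * sign((p - y) * w) for xi, w in zip(x, weights)]

def sgd_step(weights, x, y, lr):
    """One gradient step on the cross-entropy loss for a single example."""
    p = sigmoid(score(weights, x))
    return [w - lr * (p - y) * xi for w, xi in zip(weights, x)]

def adversarial_train(data, epsilon=0.5, lr=0.1, epochs=20):
    weights = [0.0] * len(data[0][0])
    for _ in range(epochs):
        for x, y in data:
            x_adv = fgsm(weights, x, y, epsilon)       # attack the current model
            weights = sgd_step(weights, x, y, lr)      # learn from the clean input
            weights = sgd_step(weights, x_adv, y, lr)  # and from its perturbed copy
    return weights

def accuracy(weights, data):
    hits = sum((sigmoid(score(weights, x)) >= 0.5) == bool(y) for x, y in data)
    return hits / len(data)

# Hypothetical, linearly separable toy data: (features, label).
data = [([2.0, 2.0], 1), ([3.0, 1.0], 1), ([2.0, 3.0], 1),
        ([-2.0, -2.0], 0), ([-3.0, -1.0], 0), ([-2.0, -3.0], 0)]

weights = adversarial_train(data)
attacked = [(fgsm(weights, x, y, 0.5), y) for x, y in data]
print(accuracy(weights, data), accuracy(weights, attacked))
```

The key design point is that adversarial examples are regenerated against the model as it changes, rather than computed once up front, so the model keeps seeing the perturbations that are currently most damaging to it.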

Defensive Distillation

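Defensive distillation smooths the decision boundaries of a neural network by training a second model on the softened probability distributions produced by a first model, rather than on hard class labels. The intent is to reduce sensitivity to small input changes and make it harder for attackers to exploit gradients, although stronger attacks developed later have been shown to bypass it, so it should not be relied on alone.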
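The "softened" probabilities used in distillation typically come from a softmax with a temperature above 1. The sketch below shows only that step, with made-up teacher logits and an arbitrary temperature of 20; it is not a full distillation pipeline.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperatures give flatter outputs."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits from a first ("teacher") model for one input.
teacher_logits = [8.0, 2.0, 0.5]

hard = softmax(teacher_logits, temperature=1.0)   # nearly one-hot
soft = softmax(teacher_logits, temperature=20.0)  # softened training targets
print([round(p, 3) for p in hard])
print([round(p, 3) for p in soft])
```

The second model is then trained to match the softened distribution, which carries information about how similar the classes look to the first model instead of a single hard label.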

Gradient Masking

This technique hides or obfuscates gradients, making it more difficult for attackers to calculate how to manipulate inputs effectively.

Caution:

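While it can raise the cost of gradient-based attacks, gradient masking is not foolproof: attackers can often sidestep it with black-box or transfer attacks that do not need the model’s true gradients. It should therefore be combined with other defense strategies rather than relied on alone.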

Robust Regularization

Regularization techniques penalize model complexity, reducing overfitting and encouraging generalization. Models trained with robust regularization are less likely to respond erratically to adversarial inputs.

Input Preprocessing

Filtering or denoising inputs before feeding them into the model helps eliminate adversarial noise. Techniques like JPEG compression, smoothing filters, or random transformations are common.
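Two simple preprocessing steps can be sketched on a one-dimensional signal: a median smoothing filter, and bit-depth reduction, which is a swapped-in stand-in for the lossy quantization that JPEG compression performs. The function names, window size, and bit depth are illustrative assumptions.

```python
from statistics import median

def median_smooth(signal, window=3):
    """Replace each value with the median of its neighborhood (edges shrink)."""
    half = window // 2
    n = len(signal)
    return [median(signal[max(0, i - half):min(n, i + half + 1)])
            for i in range(n)]

def reduce_bit_depth(signal, bits=2):
    """Quantize values in [0, 1] onto 2**bits evenly spaced levels."""
    levels = 2 ** bits - 1
    return [round(v * levels) / levels for v in signal]

# A single spike is removed by the median filter.
print(median_smooth([0.1, 0.1, 0.9, 0.1, 0.1]))

# A clean input and a slightly perturbed copy collapse to the same values.
print(reduce_bit_depth([0.30, 0.70]))
print(reduce_bit_depth([0.33, 0.68]))
```

Both steps discard fine detail, so they trade a little accuracy on clean inputs for the chance of erasing small perturbations. An attacker who knows the preprocessing can adapt to it, which is why it works best as one layer among several.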

Monitoring and Response Systems

Continuous monitoring allows models to detect suspicious input patterns and trigger alerts or fallback mechanisms. Automated response systems can block inputs that exhibit adversarial characteristics.
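One possible monitoring check, sketched below, compares the model's output on the raw input with its output on a "squeezed" copy and flags the input when the two disagree sharply. The toy classifier, the squeezing rule, and the `max_shift` threshold are all hypothetical; this is one detection idea among many, not a prescribed method.

```python
import math

def toy_model(x):
    """Hypothetical binary classifier returning [P(class 0), P(class 1)]."""
    weights, bias = [3.0, -3.0, 3.0, -3.0], -3.0
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    p = 1.0 / (1.0 + math.exp(-z))
    return [1.0 - p, p]

def squeeze(x):
    """Snap each feature in [0, 1] to 0 or 1, erasing small perturbations."""
    return [float(round(v)) for v in x]

def is_suspicious(model, x, max_shift=0.3):
    """Flag x when squeezing it changes the model's output by more than max_shift."""
    raw, squeezed = model(x), model(squeeze(x))
    return max(abs(a - b) for a, b in zip(raw, squeezed)) > max_shift

clean = [1.0, 0.0, 1.0, 0.0]
tampered = [0.7, 0.3, 0.7, 0.3]  # clean input nudged toward the decision boundary

print(is_suspicious(toy_model, clean))     # squeezing changes nothing
print(is_suspicious(toy_model, tampered))  # squeezing undoes the nudge
```

A flagged input does not have to be rejected outright; it can be logged, rate-limited, or routed to a fallback path, depending on how costly a false alarm is in the application.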

Human-in-the-Loop Systems

Where the stakes are high—such as in healthcare or finance—human oversight is critical. Systems should flag uncertain predictions and defer decisions to human experts when confidence is low.
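The deferral rule described above can be as simple as a confidence threshold. In this sketch, `route_prediction` and the threshold of 0.9 are illustrative assumptions; the right threshold depends on the cost of errors and on how well calibrated the model's probabilities are.

```python
def route_prediction(probabilities, threshold=0.9):
    """Return (label, "auto") when confident, else (None, "human_review")."""
    confidence = max(probabilities)
    label = probabilities.index(confidence)
    if confidence >= threshold:
        return label, "auto"
    return None, "human_review"

print(route_prediction([0.02, 0.97, 0.01]))  # confident: handled automatically
print(route_prediction([0.40, 0.35, 0.25]))  # uncertain: deferred to an expert
```

Because adversarial examples can produce confidently wrong predictions, a confidence threshold complements the other defenses and does not replace them.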

Human Reflection

These defense strategies underscore that adversarial-proofing is not a one-time fix—it’s an ongoing process that demands vigilance, creativity, and ethical foresight. Implementing robust defenses requires cooperation between engineers, ethicists, and end-users to ensure AI serves humanity’s best interests without compromising safety.

Frameworks and Tools for Adversarial Defense

Several frameworks and tools are helping developers design AI models that are more resilient to adversarial attacks.

TensorFlow Privacy and Robustness Packages

TensorFlow offers libraries that include privacy-preserving algorithms and adversarial training utilities. Developers can integrate adversarial robustness into existing workflows using these tools.

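PyTorch’s Adversarial Training Ecosystem

PyTorch does not ship a single official adversarial training framework, but its ecosystem includes third-party libraries such as torchattacks and AdverTorch that provide adversarial example generation methods, loss functions, and defenses, allowing researchers to test and refine models in adversarial settings.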

CleverHans Library

A Python library focused specifically on adversarial machine learning. It provides standardized algorithms for generating attacks and testing robustness across models.

Adversarial Robustness Toolbox (ART)

Developed by IBM, ART offers an open-source collection of tools for adversarial attacks and defenses. It supports models built with TensorFlow, PyTorch, and scikit-learn.

Open Datasets and Benchmarks

Initiatives like the NIPS (now NeurIPS) 2017 Adversarial Attacks and Defences Competition and various adversarial datasets allow teams to benchmark models and improve defenses collaboratively.

Human Reflection

The availability of robust tools helps democratize adversarial defense, ensuring that even small organizations can protect AI systems. However, tools are only as effective as the expertise guiding their use. Ethical decision-making, data governance, and transparency must accompany technical defenses to build truly resilient AI ecosystems.

The Future of Adversarial Security in AI

As AI continues to expand across industries, adversarial-proofing will remain a cornerstone of secure and ethical technology deployment.

Integrating Security at the Design Stage

Security can no longer be an afterthought. Model development processes are increasingly emphasizing “security by design,” where adversarial robustness is integrated from the earliest architecture choices.

Collaborative Research Networks

With adversarial threats constantly evolving, cross-industry collaboration is essential. Organizations are sharing insights and developing standards that promote secure AI without monopolizing knowledge.

Ethical AI Governance

Adversarial-proofing efforts will be intertwined with discussions on fairness, bias mitigation, and algorithmic transparency. Ethical frameworks will guide how models are trained and deployed responsibly.

AI Regulation and Certification

We can expect future certifications akin to cybersecurity standards that evaluate AI robustness. Governments and regulatory bodies are exploring frameworks that balance innovation with public safety.

Human Empowerment through Secure AI

Ultimately, adversarial-proofing empowers users to trust AI in critical tasks—whether managing health, finances, or personal data. By securing models against manipulation, we reinforce AI’s role as a tool that augments human decision-making, not undermines it.

Human Reflection

The journey toward adversarial security is as much about preserving human dignity as it is about improving algorithms. Users must feel confident that the systems they rely on are designed with their best interests in mind, not vulnerable to exploitation. Trust is earned through transparency, collaboration, and ethical responsibility.

Adversarial attacks expose the hidden vulnerabilities of AI systems, challenging developers to rethink how models are trained, tested, and deployed. From healthcare diagnostics to autonomous vehicles, AI’s susceptibility to manipulation poses significant risks—not only technical but deeply human. Adversarial-proofing machine learning systems is a collective responsibility that spans developers, researchers, regulators, and users alike.

By integrating robust defenses like adversarial training, gradient masking, and input preprocessing, alongside ethical frameworks and human oversight, we can build AI systems that are both powerful and trustworthy. The future of AI depends not only on its accuracy but on its resilience—on how well it can withstand malicious intent while continuing to serve humanity’s needs.

The implications of adversarial-proofing go beyond security; they touch on transparency, fairness, and user confidence. In a world increasingly shaped by intelligent systems, safeguarding AI against adversarial threats ensures that technology empowers rather than endangers us. As we embrace innovation, we must also embrace responsibility, recognizing that security is not an add-on—it is foundational to the safe, equitable future we aspire to build together.

FAQs

1. What is an adversarial attack in AI?

An adversarial attack is a deliberate manipulation of input data designed to trick AI models into making incorrect predictions or decisions without raising suspicion.

2. Why are AI systems vulnerable to adversarial attacks?

AI models, particularly deep learning systems, operate in high-dimensional spaces where small perturbations can significantly influence outputs. Overfitting, gradient sensitivity, and lack of robustness make them susceptible.

3. How does adversarial training help improve model security?

Adversarial training exposes models to manipulated inputs during the learning phase, teaching them to recognize and resist adversarial perturbations during real-world use.

4. Can adversarial-proofing completely eliminate threats?

No. While adversarial defenses reduce risk, no system is entirely immune. Continuous monitoring, updated defenses, and human oversight are necessary for sustained protection.

5. What industries benefit from adversarial defenses?

Healthcare, finance, transportation, security, and communication industries benefit the most as they rely on sensitive data and critical decision-making processes.

6. How does adversarial-proofing align with data privacy laws?

By limiting how much personal data can be extracted from a model, defenses against model inversion and membership inference attacks, together with secure training practices, can support compliance with privacy regulations like GDPR and CCPA.

7. What role do ethical considerations play in adversarial-proofing?

Ethical AI governance ensures that defenses are designed not only to prevent manipulation but also to uphold fairness, transparency, and trustworthiness in technology deployment.

