The Cost of AI Innovation

Artificial intelligence has transformed industries from healthcare to finance to entertainment, but this progress comes at a steep price. Running large-scale AI models through APIs often requires significant computational resources, which translates into high operational costs. For startups, small businesses, and even large enterprises, these costs can limit experimentation and adoption of AI technology.

Enter DeepSeek, an AI company that has just launched a sparse attention model designed to reduce API costs by up to 50%. Unlike traditional attention mechanisms, which compare every token in the input with every other token, sparse attention computes only the interactions it judges most relevant, which substantially reduces the amount of computation. This innovation could democratize access to AI, allowing more organizations to leverage advanced models without prohibitive costs.

In this article, we explore what sparse attention is, why it matters, and how DeepSeek’s approach could reshape AI adoption globally.

Understanding Sparse Attention

At the heart of modern AI models, particularly in natural language processing and transformer-based architectures, is the attention mechanism. This system allows the model to determine which parts of the input are most relevant to the task at hand. However, traditional (dense) attention scores every pair of input tokens, so its cost grows quadratically with input length and becomes expensive for long sequences.
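To make that cost concrete, here is a small Python sketch that counts the pairwise scores full attention has to compute for a given input length. The function name is illustrative, and the count ignores constant factors such as the number of attention heads and layers.

```python
def dense_score_count(seq_len: int) -> int:
    """Full attention scores every token against every token: n * n pairs."""
    return seq_len * seq_len


# Doubling the input length quadruples the number of scores to compute.
for n in (1_000, 2_000, 4_000):
    print(f"{n:>5} tokens -> {dense_score_count(n):>12,} pairwise scores")
```

The point of the exercise is the growth rate: the work rises with the square of the input length, not in proportion to it.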

Sparse attention addresses this by computing only a selected subset of those relationships and skipping the ones judged less important.

- Efficiency Gains: By not processing every possible connection, the model runs faster and requires fewer resources.
- Cost Reduction: Less computation directly translates to lower API usage fees.
- Scalability: Developers can process larger datasets or serve more requests without a proportional increase in cost.

Essentially, sparse attention aims to preserve model quality while making the model leaner and more sustainable, both financially and environmentally.
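As a rough illustration of the idea, the sketch below computes attention for a single query in plain Python, either over all keys or over only the top-scoring few. The top-k selection rule, the function names, and the toy vectors are assumptions made for this example; the article does not describe how DeepSeek's model chooses which interactions to keep.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def attend(query, keys, values, top_k=None):
    """Attention output for one query.

    top_k=None -> dense attention over every key.
    top_k=k    -> keep only the k highest-scoring keys (illustrative rule).
    """
    scale = math.sqrt(len(query))
    scores = [dot(query, key) / scale for key in keys]
    kept = range(len(keys))
    if top_k is not None:
        kept = sorted(kept, key=lambda i: scores[i], reverse=True)[:top_k]
    weights = softmax([scores[i] for i in kept])
    dim = len(values[0])
    return [sum(w * values[i][d] for w, i in zip(weights, kept)) for d in range(dim)]


# Toy data: four keys, one-dimensional values.
keys = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]]
values = [[1.0], [2.0], [3.0], [4.0]]
query = [4.0, 0.0]

print(attend(query, keys, values))           # dense: all 4 keys contribute
print(attend(query, keys, values, top_k=2))  # sparse: only the 2 best matches
```

Note that this toy version still scores every key before selecting, so it only saves work in the weighted sum. A practical system needs a cheaper selection step to realize real savings, which this sketch does not model.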

Why DeepSeek’s Innovation Matters

Lower Operational Costs

For developers and businesses using AI APIs, operational costs are often the biggest barrier to scaling. DeepSeek’s sparse attention model is designed to cut these costs by up to half, enabling:

- Startups to experiment freely without expensive infrastructure.
- Enterprises to deploy AI at scale without exceeding budgets.
- Increased accessibility for organizations in emerging markets where high computational costs can be prohibitive.
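A quick back-of-the-envelope calculation shows what a reduction of that size could mean for a monthly bill. Every number below is hypothetical: the token volume and the per-million-token price are made up for the arithmetic, and the 50% figure is the "up to" ceiling, so it represents the best case.

```python
def monthly_api_cost(tokens_per_month: int, price_per_million_tokens: float) -> float:
    """Monthly spend, in the same currency as the price."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


# Hypothetical inputs, chosen only to make the arithmetic easy to follow.
TOKENS_PER_MONTH = 500_000_000
OLD_PRICE = 2.00       # per million tokens, made-up figure
MAX_REDUCTION = 0.50   # the "up to 50%" claim, treated as the best case

before = monthly_api_cost(TOKENS_PER_MONTH, OLD_PRICE)
after = monthly_api_cost(TOKENS_PER_MONTH, OLD_PRICE * (1 - MAX_REDUCTION))
print(f"before: {before:,.2f}  after: {after:,.2f}  saved: {before - after:,.2f}")
```

Swapping in your own volume and your provider's published prices gives a more realistic estimate; actual savings depend on how pricing is structured.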

Improved Performance

Sparse attention not only cuts costs but also enhances speed and responsiveness. By processing only relevant data, AI applications can deliver results faster—critical for real-time systems such as chatbots, recommendation engines, and financial analytics.

Environmental Benefits

High-computation AI models consume significant energy. By reducing unnecessary processing, sparse attention lowers energy consumption, contributing to greener AI practices. Organizations adopting such models can simultaneously reduce costs and their carbon footprint.

Practical Applications of Sparse Attention

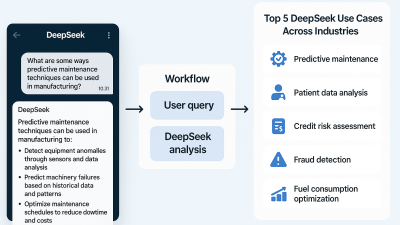

Conversational AI

Chatbots and virtual assistants can now manage longer conversations or multiple simultaneous users efficiently. Sparse attention ensures that AI focuses on key parts of a conversation without overloading the system.
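One generic way to keep long conversations affordable is to let each new token attend only to a few always-visible opening tokens (a system prompt, say) plus a window of recent tokens. The sketch below illustrates that pattern; the function name and parameters are hypothetical, and nothing in this article indicates that DeepSeek's model uses this particular scheme.

```python
def visible_positions(current: int, window: int, n_global: int) -> list[int]:
    """Positions that token `current` attends to in a causal conversation.

    Keeps the first `n_global` tokens (for example a system prompt) plus the
    most recent `window` tokens, including the current one.
    """
    recent_start = max(0, current - window + 1)
    # Cap the global range so it never overlaps the recent window.
    global_part = range(min(n_global, recent_start))
    recent_part = range(recent_start, current + 1)
    return list(global_part) + list(recent_part)


# The number of attended positions stops growing once the chat gets long.
for position in (10, 1_000, 100_000):
    kept = visible_positions(position, window=256, n_global=4)
    print(f"token {position}: attends to {len(kept)} of {position + 1} positions")
```

The trade-off is visible in the code: anything outside the window and the opening tokens is simply not seen, which is why the choice of what to keep matters so much.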

Data-Intensive Analysis

Industries like finance, healthcare, and research generate vast datasets. Sparse attention allows AI to analyze extensive data sequences without incurring the high costs typically associated with large models.

Multimodal AI Systems

Models processing multiple types of data (text, images, audio) also stand to benefit, because combining modalities lengthens the input the model has to attend over. Sparse attention can prioritize the most relevant cross-modal interactions, reducing computational bottlenecks while keeping results fast and accurate.

Pros and Cons

Advantages

- Significant Cost Savings: Up to 50% reduction in API costs.
- Faster, More Scalable Models: Enables real-time AI applications at scale.
- Environmentally Friendly: Lower energy usage for the same tasks.

Potential Drawbacks

- Accuracy Trade-offs: Sparse models may miss minor, but relevant, patterns in complex datasets.
- Learning Curve: Developers may need to adapt existing architectures to fully leverage sparse attention.
- Integration Challenges: Current APIs and pipelines may require updates for compatibility.

Despite these considerations, the overall impact of sparse attention is overwhelmingly positive for efficiency and scalability.
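The accuracy trade-off can be made tangible with a simple diagnostic: how much of the softmax attention weight survives if only the highest-scoring keys are kept. The sketch below is an assumption-laden illustration, with a hypothetical function name and invented scores, and is not a benchmark of any real model.

```python
import math


def retained_attention_mass(scores: list[float], top_k: int) -> float:
    """Fraction of softmax weight accounted for by the top_k highest scores."""
    peak = max(scores)
    # exp is monotonic, so sorting the exponentials ranks the scores too.
    exps = sorted((math.exp(s - peak) for s in scores), reverse=True)
    return sum(exps[:top_k]) / sum(exps)


peaked = [8.0, 7.5, 0.1, 0.0, -0.2, -1.0]  # a few keys dominate
flat = [1.0, 0.9, 1.1, 1.0, 0.95, 1.05]    # relevance is spread evenly

for name, scores in (("peaked", peaked), ("flat", flat)):
    share = retained_attention_mass(scores, 2)
    print(f"{name}: top-2 keeps {share:.1%} of the weight")
```

When a few keys dominate, dropping the rest loses almost nothing. When relevance is spread evenly, the same cutoff discards most of the weight, which is the kind of case where sparse models may miss relevant patterns.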

Global Industry Perspective

- Cost Efficiency Trend: Organizations worldwide are seeking AI solutions that balance performance and affordability. Sparse attention aligns perfectly with this demand.
- Emerging Markets: High computational costs often limit AI adoption in countries like India, Brazil, and parts of Africa. Efficient models like DeepSeek’s could enable broader access.
- Competitive Advantage: Companies implementing cost-effective AI models can innovate faster, scale services, and gain an edge over competitors.

The economic implications are substantial: reduced API costs not only enable new applications but also accelerate global AI adoption.

FAQs

Q1: How does sparse attention reduce computational costs?

By evaluating only the most relevant relationships in the data, sparse attention minimizes unnecessary computations, lowering resource use and API costs.

Q2: Does sparse attention compromise model accuracy?

In most cases, carefully designed sparse attention models maintain performance, though some niche tasks may see minor trade-offs.

Q3: Who benefits most from DeepSeek’s model?

Startups, SaaS platforms, and large enterprises relying on API-driven AI services will see immediate advantages in cost, speed, and scalability.

Q4: Is sparse attention suitable for all AI models?

Not equally. Transformer-based models that handle long inputs benefit the most, while smaller or specialized models that work on short inputs may see limited gains.

A Milestone in AI Efficiency

DeepSeek’s sparse attention model is more than a technical improvement; it is a strategic innovation with global implications. By cutting API costs by up to 50%, it enables startups, enterprises, and developers to scale AI applications more efficiently, affordably, and sustainably.

Key Takeaways:

- Cost savings make AI accessible to more organizations.
- Efficiency gains improve real-time application performance.
- Reduced energy consumption contributes to greener AI practices.

Looking Ahead:

Sparse attention could become a standard in AI architectures, transforming how models are deployed globally. As AI adoption continues to expand, innovations like DeepSeek’s pave the way for a future where powerful AI is not just accessible, but sustainable and efficient.


Note: All logos, trademarks, and brand names referenced herein remain the property of their respective owners. The content is provided for editorial and informational purposes only. Any AI-generated images are illustrative and do not represent official brand assets.