Drawing the Digital Line: How ChatGPT Handles Suicide Conversations Differently for Teens and Adults

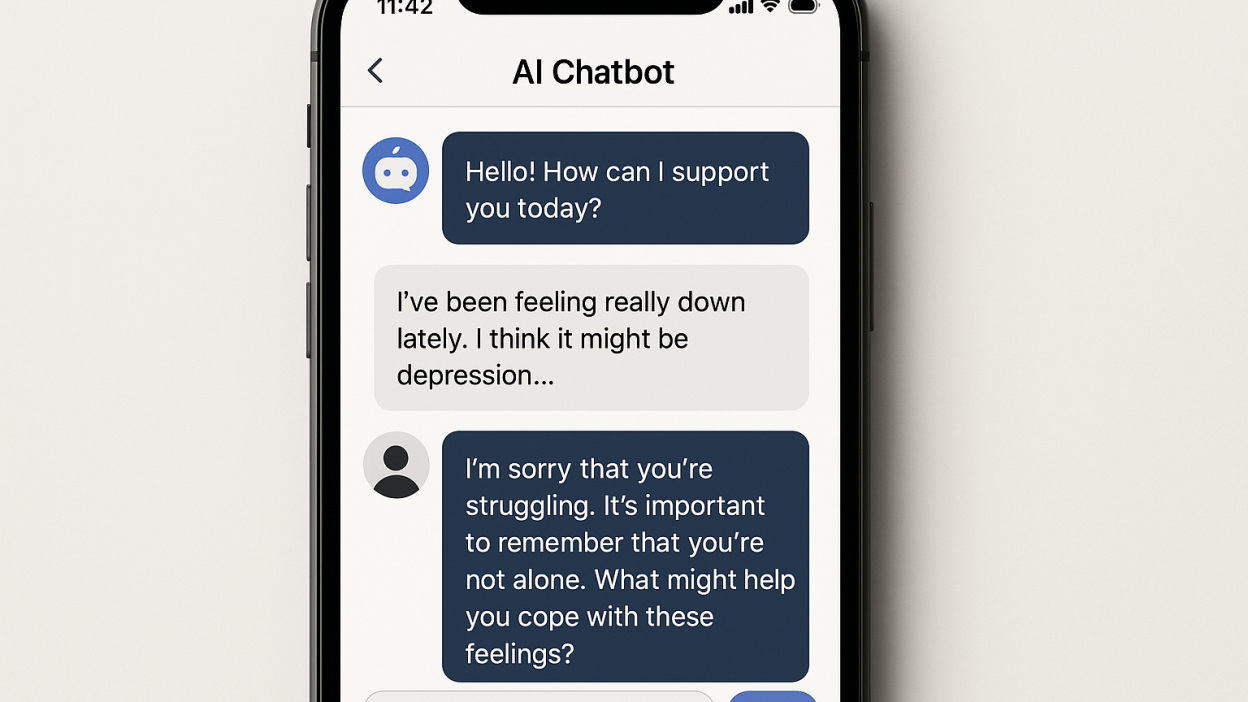

Artificial intelligence is becoming a central part of daily life, from workplace productivity tools to personal digital assistants. Among these, ChatGPT, OpenAI's conversational AI, has gained global attention for its ability to answer questions, provide guidance, and simulate human-like conversation. As these systems grow more capable, the responsibility they carry grows too. One of the most sensitive and debated areas is how AI handles conversations about suicide. OpenAI CEO Sam Altman recently said that ChatGPT will stop engaging in suicide-related conversations with teenagers, a policy shift that highlights the ethical complexity of AI.

The decision raises important questions: Why are teens treated differently from adults? How can AI offer support while avoiding harm? Suicide is a leading cause of death among teenagers worldwide, which makes early intervention vital. However, AI lacks the judgment of trained mental health professionals and must navigate these discussions carefully.

From a human perspective, this policy reflects a broader societal challenge: safeguarding vulnerable populations in a digital age. AI interactions can provide comfort, but they are not substitutes for human empathy, professional care, or community support. By drawing this “digital line,” ChatGPT illustrates the delicate balance between accessibility, safety, and responsibility, a balance with profound implications for both teens and adults now that people increasingly confide their personal struggles to AI.

The Ethical Imperative Behind AI Restrictions

AI’s ability to respond to sensitive topics is both a technological achievement and a moral quandary. The restriction on teen suicide conversations stems from a clear ethical imperative: protecting minors from guidance that could unintentionally worsen their distress. Adolescents are still developing neurologically and emotionally, which makes them more vulnerable to risk and outside influence.

OpenAI’s approach demonstrates an understanding of these vulnerabilities. By limiting direct discussions with teens about suicide, ChatGPT avoids scenarios where it might provide incomplete or misinterpreted advice. This preventive measure aligns with broader societal practices, such as age-appropriate counseling, parental guidance, and regulatory protections in online spaces.

From a human-centered viewpoint, this reflects our instinct to safeguard those most at risk. While AI can offer resources and empathetic communication, it cannot replace the judgment of someone trained to handle mental health crises. In effect, ChatGPT’s boundary is ethical caution expressed in software, balancing innovation with responsibility.

Adult Conversations: Empowering with Caution

For adults, ChatGPT’s policies are more permissive, allowing discussions about suicide with appropriate safeguards. Adults are generally considered capable of processing complex information, evaluating risks, and seeking professional help when needed. AI can provide valuable resources, coping strategies, and empathetic engagement in a way that supplements human support systems.

For adults, the approach also incorporates disclaimers, resource referrals, and safety prompts, so that users are guided toward qualified help rather than relying solely on AI. For instance, ChatGPT can offer crisis hotline numbers, outline the steps of a safety plan, or suggest strategies for managing stress. These measures support adults without overstepping ethical boundaries and show how AI can responsibly augment mental health care.

The practical implications are significant. Many adults face barriers to traditional mental health services, whether stigma, limited access, or cost. ChatGPT offers a low-barrier point of engagement that can help bridge the gap between need and professional care. Even so, AI is a facilitator, not a replacement: human empathy and professional expertise remain essential in life-threatening situations.

Lessons from Digital Interventions

Evidence from digital mental health tools illustrates the delicate balance between support and risk. For example, studies have shown that chatbots providing cognitive behavioral therapy (CBT) techniques can reduce symptoms of anxiety and depression in adults. However, when similar interventions are applied to teens without supervision, outcomes can be inconsistent and, in rare cases, harmful.

One illustrative case involved an AI-driven mental health app aimed at adolescents. While many users reported relief and guidance, some misinterpreted its suggestions, exposing the limits of unsupervised digital intervention. This underscores why ChatGPT restricts teen suicide conversations: AI cannot reliably assess situational context, emotional cues, and immediate risk for younger users.

Adult users, by contrast, have benefited from AI-assisted interventions. Adults in remote areas, for example, have used AI chat tools to access coping strategies during periods of acute stress, showing how well-guided AI can extend the reach of mental health resources. These cases suggest that the age-based approach is rooted not in exclusion but in safety considerations.

Societal Implications of Age-Based AI Policies

The decision to differentiate AI responses by age has broader societal consequences. It raises questions about digital literacy, the responsibilities of technology providers, and the role of AI in public health. Teenagers, who are often digitally native, rely heavily on online interactions for social and emotional support. By restricting certain AI conversations, OpenAI signals the importance of integrating human supervision, parental involvement, and professional guidance in digital spaces.

For adults, the policy encourages responsible AI engagement, reminding users that technology can supplement but not replace traditional support systems. It also fosters awareness around mental health, normalizing help-seeking behaviors and providing immediate access to resources.

From a societal perspective, these policies may influence regulatory approaches to AI, shaping expectations around ethical responsibility, risk mitigation, and age-appropriate access. They exemplify the broader challenge of balancing technological innovation with public safety in an increasingly AI-driven world.

Practical Advice for Safe AI Engagement

While AI can be a supportive tool, it is essential to use it responsibly. Here are practical considerations:

- Know the Limits: Recognize that AI provides guidance, not professional counseling. Always seek trained support in a mental health crisis.
- Use Available Resources: In conversations with adults about sensitive topics, ChatGPT can provide hotline numbers, coping strategies, and references to credible mental health organizations.
- Supervision Matters for Teens: For adolescents, guidance from trusted adults, whether parents, teachers, or counselors, is crucial when navigating emotional challenges.
- Promote Digital Literacy: Teaching users how AI works, what it can do, and where its limits lie makes interactions safer and more effective.
- Combine with Human Support: AI should augment, not replace, face-to-face therapy, support groups, or community networks.

These guidelines highlight the human-centered approach required when integrating AI into sensitive areas like mental health, ensuring technology serves as a complement rather than a substitute.

Future Directions for AI in Mental Health

The field of AI-assisted mental health is rapidly evolving. Future iterations of ChatGPT and similar tools may include:

- Enhanced risk detection algorithms that better assess context and emotional cues.
- Age-adaptive interfaces that dynamically tailor guidance while maintaining safety.
- Seamless integration with human counselors for real-time intervention.
- Continuous ethical audits and updates to align AI behavior with evolving mental health research.

These developments illustrate a promising trajectory: AI that respects boundaries, safeguards vulnerable populations, and amplifies human capacity for empathy and intervention. The digital line drawn today may evolve into a sophisticated framework where AI responsibly supports users of all ages.

Drawing the digital line between teen and adult engagement reflects a critical evolution in AI ethics and practical application. By restricting suicide-related conversations with adolescents, ChatGPT acknowledges the unique vulnerabilities of younger users while promoting safety and responsible usage. For adults, the AI offers guided support, resources, and empathetic interaction, empowering users without replacing professional care.

This approach underscores the broader societal challenge of integrating AI into emotionally sensitive domains. It illustrates how technology can complement human support systems while respecting ethical boundaries. The age-based differentiation not only protects teens but also educates users about responsible AI interaction, bridging the gap between innovation and human-centered care.

Ultimately, the policy invites reflection on our collective responsibility: designing AI that is helpful, safe, and socially conscious. It serves as a reminder that while AI can provide guidance, empathy, and information, human judgment, professional intervention, and community support remain irreplaceable pillars in addressing mental health crises. In navigating this digital frontier, ChatGPT exemplifies the balance between empowerment and ethical caution.

FAQs

1. Why does ChatGPT restrict suicide conversations for teens?

To protect minors from potential harm, as AI cannot fully assess their emotional and situational context.

2. Can adults talk to ChatGPT about suicide?

Yes, with safeguards, disclaimers, and referrals to professional resources.

3. Does ChatGPT replace mental health professionals?

No. It supplements support but cannot provide professional counseling or crisis intervention.

4. What resources does ChatGPT provide for adults in crisis?

Hotline numbers, coping strategies, safety planning guidance, and referrals to mental health organizations.

5. How can teens safely use AI for emotional support?

With adult supervision: teens can use AI for general guidance while turning to parents, counselors, or other professionals for real support.

6. Will ChatGPT’s policies evolve over time?

Most likely. OpenAI regularly updates ChatGPT's behavior based on research, ethical considerations, and user safety.

7. How can society ensure safe AI usage in mental health?

Through education, supervision, ethical guidelines, and combining AI with professional care.


Note: Logos and brand names are the property of their respective owners. This image is for illustrative purposes only and does not imply endorsement by the mentioned companies.